Publications

Interactive Cloud-based Global Illumination for Shared Virtual Environments

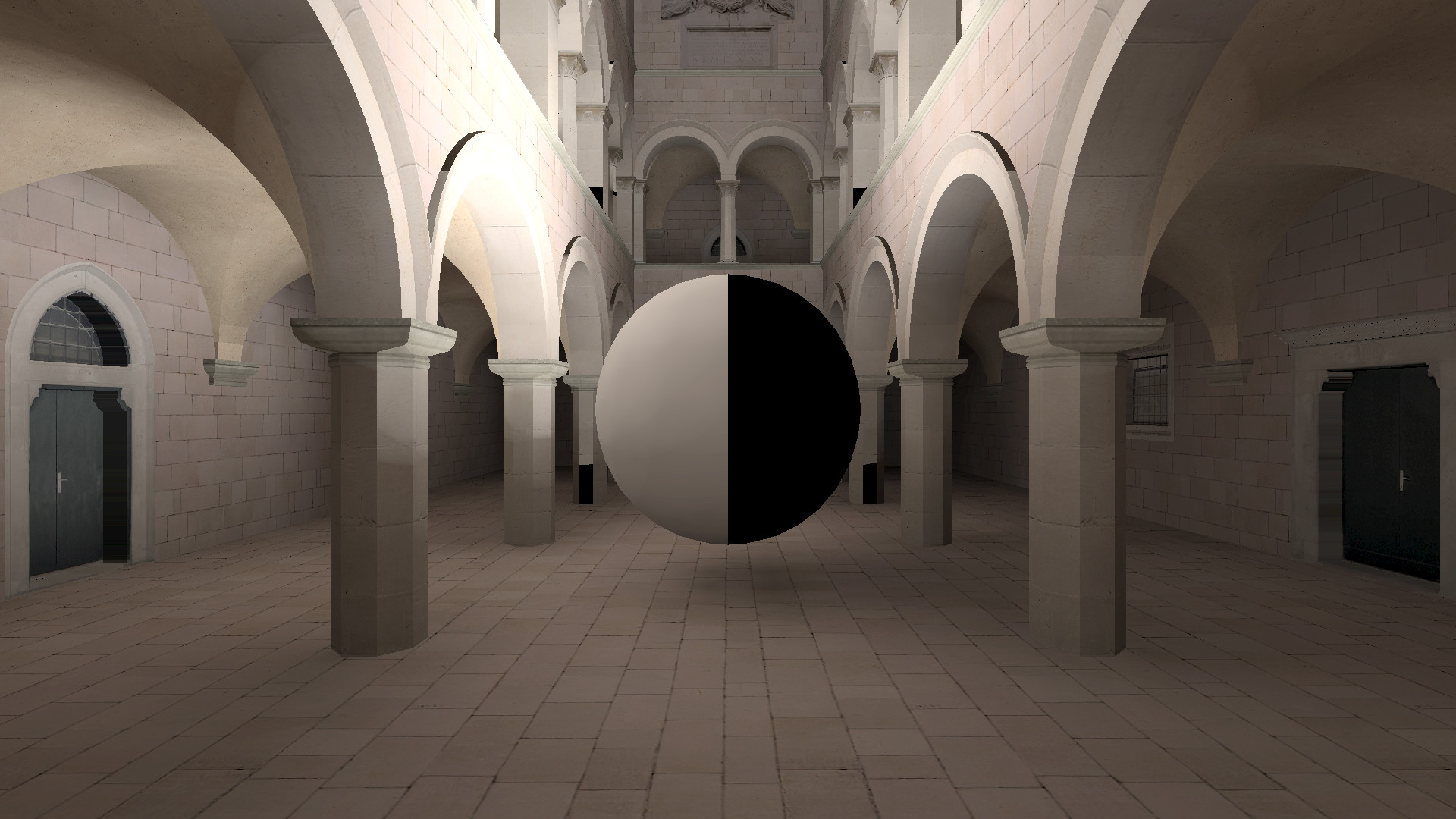

Real-time, high-fidelity rendering requires expensive high-end hardware, even for moderately complex scenes. Interactive streaming services and cloud gaming have partially mitigated this problem, but at the cost of response lag. We present a cloud-based distributed rendering pipeline for shared virtual environments that eliminates response lag and delivers interactive global illumination on a wide range of client devices. Results show that the method is scalable, has low bandwidth requirements, and produces images of quality comparable to instant radiosity.

VS-Games, 2019.

Cloud-based Dynamic GI for Shared VR Experiences

We extend the method presented in "Interactive Cloud-based Global Illumination for Shared Virtual Environments" to produce a distributed rendering pipeline that eliminates response lag and provides cloud-based dynamic global illumination (GI) for low-powered devices, such as smartphones and the class of hardware typically found in untethered VR headsets.

IEEE Computer Graphics and Applications, 2020.

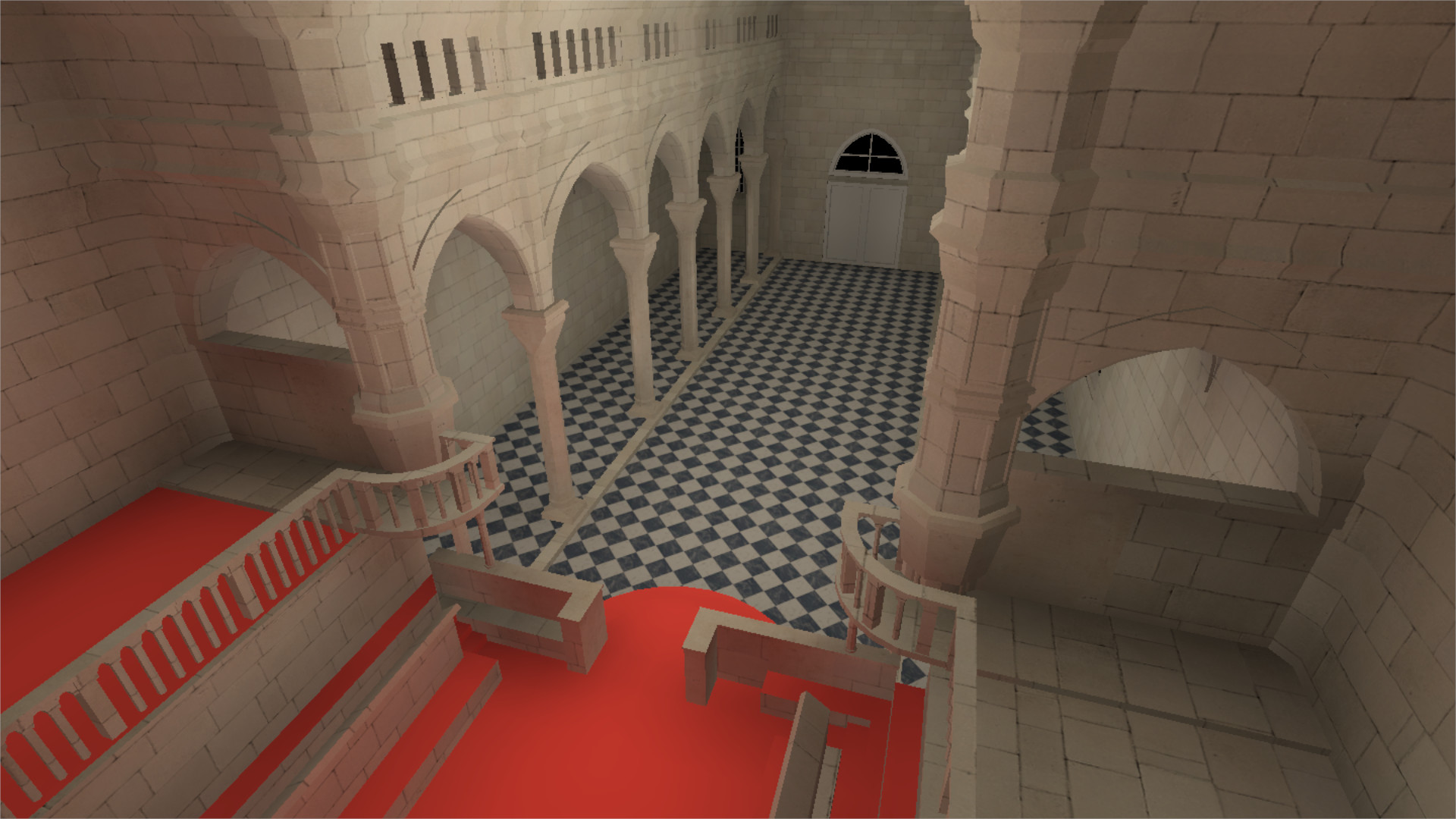

Atlas Shrugged: Device-Agnostic Radiance Megatextures

We propose a novel distributed rendering pipeline for highly responsive, high-fidelity graphics based on the concept of device-agnostic radiance megatextures (DARM), a network-based out-of-core algorithm that circumvents VRAM limitations without sacrificing texture variety. We evaluated DARM as a vehicle for bringing hardware-accelerated ray tracing to several device classes, including smartphones and single-board computers. Results show that DARM enables these devices to visualise high-quality ray-traced output at high frame rates with low response times.

Proceedings of VISIGRAPP, 2020.